August 14, 2025



The Looming Threat of AI Agent-Powered Attackers

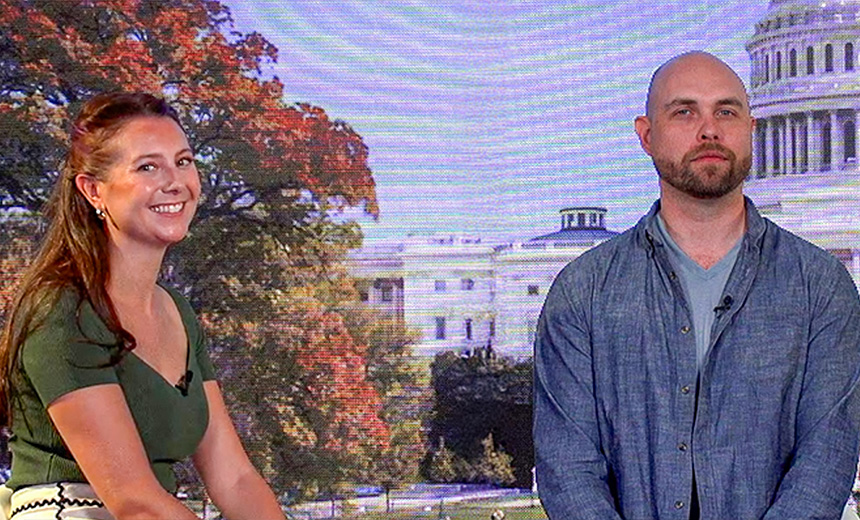

The transition of artificial intelligence-powered agents from laboratory research to active deployment is reshaping the cyberthreat landscape. Recent field evidence shows threat actors are already integrating large language models into offensive operations, with Ukraine's CERT documenting APT28 malware that uses natural language tasking.

"What will be transformative is when you start seeing the decision-making of the human being handed off to machine reasoning," said Gianpaolo Russo, head of AI and autonomous cyber operations at Mitre. This shift lets adversaries operate at machine speed across multiple network locations simultaneously, fundamentally challenging traditional defensive approaches.

The implications extend beyond speed to scale and sophistication. "Large language models have this incredible dual nature, to not only ingest natural language, but also use that and create more machine-readable types of code or tunnel scripting," said Marissa Dotter, lead AI engineer at Mitre.

In a video interview with Information Security Media Group at Black Hat USA 2025, Dotter and Russo also discussed:

- The role of digital twin environments in testing autonomous AI agents;

- How AI agents demonstrate self-improvement capabilities through performance optimization;

- The computational resource requirements of AI-powered malware and their implications for detection strategies.

Russo is an applied researcher solving hard cyber problems at the Mitre Corporation. His interdisciplinary research experience has spanned the reverse engineering and analysis of embedded and cyber-physical systems, the development of distributed sensor networks, mobile network communications analysis, vulnerability disclosure policy, and applied behavioral science.

At Mitre, Dotter leads AI research and development in machine learning applications such as computer vision, natural language processing, and acoustics. With more than eight years of experience, she oversees projects including AI system assurance and autonomous cyber operations.


Source: Originally published at BankInfoSecurity on August 14, 2025.